Show me the money ... the cash ... the cache

CloudGuruChallenge: Improve application performance using Amazon ElastiCache

Goal

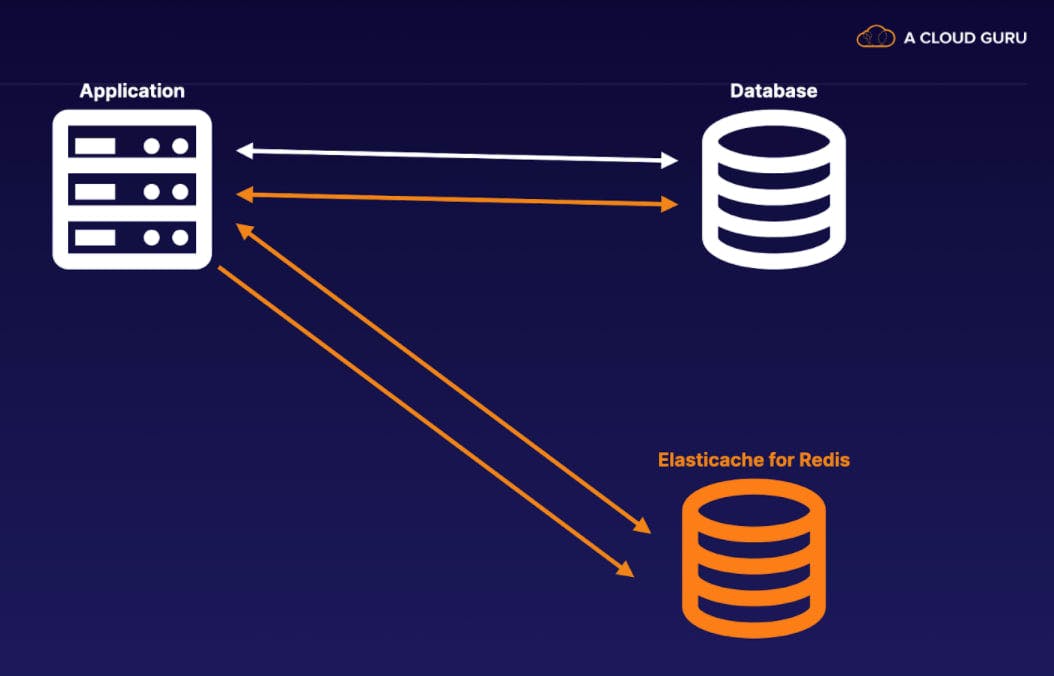

The goal of this project was to observe a significant improvement in the performance of a web application by implementing a Redis cluster using Amazon ElastiCache to cache database queries in a simple Python application.

Project Description

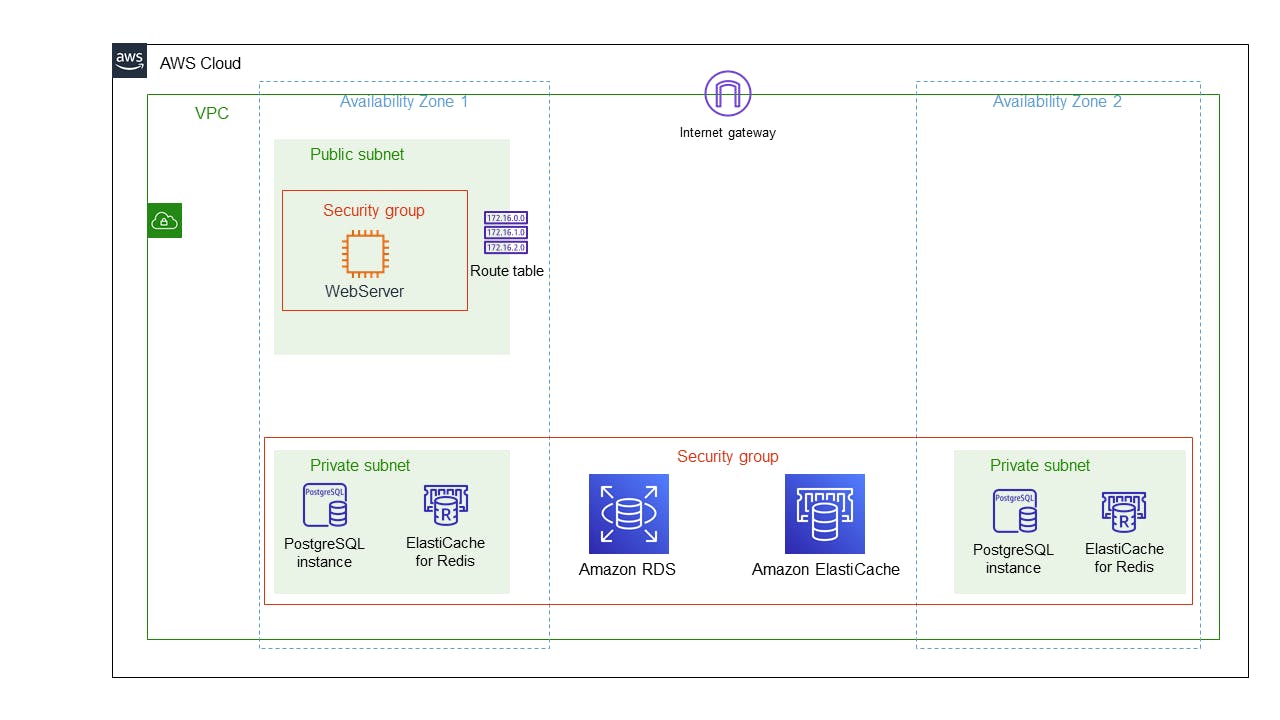

This project has two main steps. First, implement a LAMP-style web application using Linux, NGINX, PostgreSQL and Python, and measure the performance of a Python application that is intentionally made slow. Second, add a Redis ElastiCache cluster to the web application and observe the improvement in performance. Two CloudFormation templates create the architecture. The first template, postgres-rds.yaml, creates these resources:

- Custom VPC

- Two Private Subnets in two AZs for Database and Redis cache

- One Public Subnet for the NGINX webserver

- Internet Gateway

- Public Route Table

- Public Network ACL

- Two Security Groups (Web and DB)

- RDS PostgreSQL Database

- Webserver EC2 Instance (requires an EC2 key pair, which is region-specific)

- Database Migration Service (DMS) IAM CloudWatch Role

The second CloudFormation template, redis-cache.yaml, creates the Redis ElastiCache cluster. To do so, it imports these four Outputs from the postgres-rds.yaml CloudFormation template:

- Custom VPC

- WebServer Security Group

- Private Subnet 1

- Private Subnet 2

Main Steps

Using CloudFormation, create a LAMP-style web application using Linux, NGINX, PostgreSQL and Python. This CloudFormation template is found in the GitHub repository.

Log in to the NGINX webserver instance and confirm that all the UserData installations have completed successfully.

On the NGINX webserver CLI, clone the challenge git repository:

# cd /home

# git clone https://github.com/ACloudGuru/elastic-cache-challenge

Modify the config/database.ini file with the RDS Primary Endpoint, Database Name, Database Username and Database Password.

Test database connectivity from the CLI of the NGINX webserver:

# export PGHOST=rd1q5tnfnbcu9jm.cqaalqhinv4o.us-east-1.rds.amazonaws.com

# psql -d lordsdb -U lordofthecastle -h $PGHOST

\q

On the NGINX webserver, run the SQL commands found in the install.sql file:

# psql -d lordsdb -U lordofthecastle -h $PGHOST -f install.sql

The previous command should return CREATE FUNCTION. On the NGINX webserver, start the Python web application.

# python2.7 app-pre-redis.py

* Serving Flask app "app-pre-redis" (lazy loading)

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: off

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

From a browser, test the Python web application at http://{Public IP of EC2 Instance}/app, e.g. 54.211.76.71/app. You should see something like:

DB Version = PostgreSQL 12.5 on x86_64-pc-linux-gnu, compiled by gcc (GCC) 4.8.3 20140911 (Red Hat 4.8.3-9), 64-bit

Measure the performance using an instance in the EU-West-1 (Ireland) region.

# export APP_URL=http://54.211.76.71/app

Run one test:

# curl -L $APP_URL

Run multiple tests:

# time (for i in {1..5}; do curl -L $APP_URL; echo; done)
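The same measurement can also be taken from Python. The following is a minimal sketch, assuming Python 3 is available on the measuring instance; `time_requests` is a hypothetical helper name, not part of the challenge repository. It takes any callable, so the HTTP request itself stays outside the timing logic.

```python
import time
from typing import Callable, List, Tuple


def time_requests(fetch: Callable[[], object], runs: int = 5) -> Tuple[List[float], float]:
    """Call fetch() `runs` times; return the per-call durations and their sum (seconds)."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch()
        durations.append(time.perf_counter() - start)
    return durations, sum(durations)


# Usage against the web application (address is the example from this walkthrough):
#
#   from urllib.request import urlopen
#   durations, total = time_requests(lambda: urlopen("http://54.211.76.71/app").read())
#   for d in durations:
#       print(f"{d:.5f}s")
#   print(f"total {total:.3f}s")
```

Unlike the shell `time` wrapper, this reports each request separately, which makes a single slow cache miss easy to spot among fast hits.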

Record the output for later comparison with the Redis cache result. On the NGINX webserver, stop the Python web application.

Using CloudFormation, add a Redis ElastiCache cluster to the web application. This CloudFormation template is found in the GitHub repository. The redis-cache.yaml template will create the Redis ElastiCache cluster and permit access to it from the NGINX webserver.

Test Redis cache connectivity from the CLI of the NGINX webserver, using the interactive Python interpreter:

# python2.7

import redis

client = redis.Redis.from_url('redis://nginx-cache.r0xhe9.ng.0001.use1.cache.amazonaws.com:6379')

client.ping()

(result will be displayed, hopefully it is 'True')

<Ctrl>D (to exit)

Modify database.ini: add a [redis] section and, within that section, a redis_url entry. For example:

[redis]

redis_url=redis://rds-cache.r0xhe9.ng.0001.use1.cache.amazonaws.com:6379
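A stray space in the URL is enough to break the connection, so it can help to validate the value when it is read. The sketch below assumes the file is parsed with Python's standard `configparser` module; the challenge application may read it differently, and `load_redis_url` is a hypothetical helper name.

```python
import configparser
from urllib.parse import urlsplit

# Sample file contents; the hostname is the example value from this walkthrough.
SAMPLE_INI = """
[redis]
redis_url=redis://rds-cache.r0xhe9.ng.0001.use1.cache.amazonaws.com:6379
"""


def load_redis_url(ini_text: str) -> str:
    """Return redis_url from the [redis] section, rejecting malformed values."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    url = parser.get("redis", "redis_url").strip()
    # Embedded whitespace (e.g. "redis:// host") is a common copy-paste mistake.
    if any(ch.isspace() for ch in url):
        raise ValueError(f"redis_url contains whitespace: {url!r}")
    parts = urlsplit(url)
    if parts.scheme != "redis" or not parts.hostname:
        raise ValueError(f"unexpected redis_url: {url!r}")
    return url


redis_url = load_redis_url(SAMPLE_INI)
parts = urlsplit(redis_url)
print(parts.hostname, parts.port)
```

The returned string can then be passed to `redis.Redis.from_url`, as in the connectivity test above.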

On the NGINX webserver, start the modified Python web application, which checks the Redis cache first and writes the SQL results into the cache after a cache miss:

# python2.7 app-post-redis.py

* Serving Flask app "app-post-redis" (lazy loading)

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: off

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

Measure the performance a second time using the same instance in the EU-West-1 (Ireland) region. I observed that the first query takes about 5 seconds to return when there is a cache miss. I set the Time To Live (TTL) of the cache to 15 seconds, so if I ran the queries again before the TTL had expired, all five results came back within one second, compared to about 25 seconds without the cache.
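The miss-then-hit behaviour described above follows the cache-aside pattern. Here is a minimal sketch of that pattern, not the code from app-post-redis.py: `InMemoryTTLCache` is a hypothetical stand-in that mimics only the `get` and `setex` calls of a Redis client (and returns strings, where redis-py returns bytes by default), the query text is illustrative, and the clock is injected so the 15-second TTL can be exercised without waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class InMemoryTTLCache:
    """Hypothetical stand-in for a Redis client, offering get() and setex() only."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # entry outlived its TTL
            return None
        return value

    def setex(self, key: str, ttl_seconds: int, value: str) -> None:
        self._data[key] = (self._clock() + ttl_seconds, value)


def fetch(sql: str, cache, run_query: Callable[[str], str],
          ttl_seconds: int = 15) -> Tuple[str, bool]:
    """Cache-aside read: return (result, was_cache_hit)."""
    cached = cache.get(sql)
    if cached is not None:
        return cached, True
    result = run_query(sql)                # slow path: ask the database
    cache.setex(sql, ttl_seconds, result)  # keep it for later requests
    return result, False


# Demo with a fake clock: five requests one second apart, then one after expiry.
now = [0.0]
cache = InMemoryTTLCache(clock=lambda: now[0])
queries_run = []


def slow_query(sql: str) -> str:
    queries_run.append(sql)  # record every trip to the "database"
    return "DB Version = PostgreSQL 12.5"


hits = []
for _ in range(5):
    _, hit = fetch("SELECT version()", cache, slow_query)
    hits.append(hit)
    now[0] += 1.0

now[0] = 20.0  # past the 15-second TTL
_, hit_after_expiry = fetch("SELECT version()", cache, slow_query)
print(hits, hit_after_expiry, len(queries_run))
```

Only the first request and the one made after expiry reach the database; the four in between are served from the cache, which is why a run that starts cold costs roughly one slow query rather than five.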

Results

Five queries of web application without cache

Elapsed time: 5.04091s

Elapsed time: 5.04651s

Elapsed time: 5.04148s

Elapsed time: 5.05322s

Elapsed time: 5.02803s

real 0m25.914s

user 0m0.019s

sys 0m0.011s

Five queries of web application with cache where TTL has expired

Elapsed time: 5.04306s

Elapsed time: 0.00334s

Elapsed time: 0.00339s

Elapsed time: 0.00345s

Elapsed time: 0.00320s

real 0m5.783s

user 0m0.021s

sys 0m0.009s

Five queries of web application with cache where TTL has not expired

Elapsed time: 0.00327s

Elapsed time: 0.00536s

Elapsed time: 0.00320s

Elapsed time: 0.00356s

Elapsed time: 0.00327s

real 0m0.727s

user 0m0.028s

sys 0m0.001s
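To put the three runs side by side, the speedup can be computed directly from the figures above. This is a small worked calculation using only the recorded values; the variable names are illustrative.

```python
from statistics import mean

# Wall-clock ("real") totals for five requests, copied from the results above.
no_cache_s = 25.914
cold_cache_s = 5.783   # first request misses, the next four hit
warm_cache_s = 0.727   # all five requests hit

# Per-request times reported by the application ("Elapsed time").
no_cache_elapsed = [5.04091, 5.04651, 5.04148, 5.05322, 5.02803]
warm_cache_elapsed = [0.00327, 0.00536, 0.00320, 0.00356, 0.00327]


def speedup(baseline_s: float, improved_s: float) -> float:
    """How many times faster the improved run is than the baseline."""
    return baseline_s / improved_s


cold_speedup = speedup(no_cache_s, cold_cache_s)
warm_speedup = speedup(no_cache_s, warm_cache_s)
per_request_speedup = speedup(mean(no_cache_elapsed), mean(warm_cache_elapsed))

print(f"cold cache, end to end: {cold_speedup:.1f}x")
print(f"warm cache, end to end: {warm_speedup:.1f}x")
print(f"warm cache, per request inside the app: {per_request_speedup:.0f}x")
```

End to end, the cold-cache run is about 4.5 times faster and the warm-cache run about 36 times faster than the run without a cache. The per-request figure inside the application is far larger, because the warm-cache wall-clock total of 0.727s is much more than the sum of the reported elapsed times; the difference is presumably network round-trip time between Ireland and the webserver, which the cache cannot reduce.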

Implemented Architecture

The diagram below depicts the architecture I implemented. The entire architecture was created by two CloudFormation templates.

Learning References

The ElastiCache learning reference that taught me the most, and without which I probably would not have completed this project, was: Lab: Using ElastiCache to Improve Database Performance

Conclusion

This was an enjoyable project, and one of the important lessons I learned was that implementing a Redis cache is much simpler than I had imagined. The impact of the cache was substantial, reducing the response time of five queries from about 25 seconds to under 1 second.