Image Analysis Web Application Using 3 Cloud Service Providers

I invite you to read my solution to Scott Pletcher's A Cloud Guru Multi-Cloud Madness challenge.

Multi-Cloud Challenge

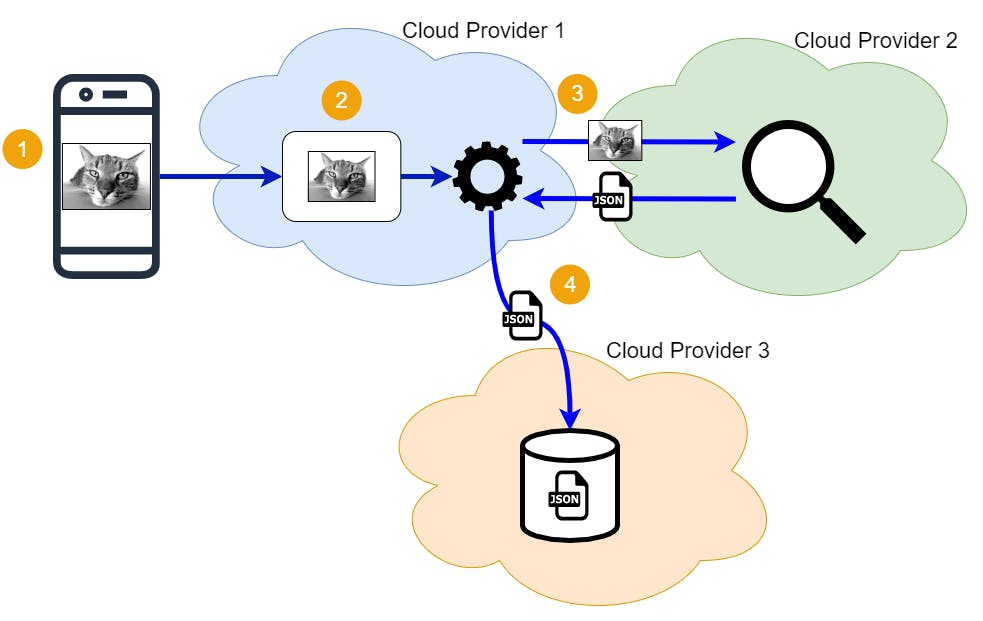

This informative picture (once you get past the cats) is the diagram Scott Pletcher included to visualize the challenge:

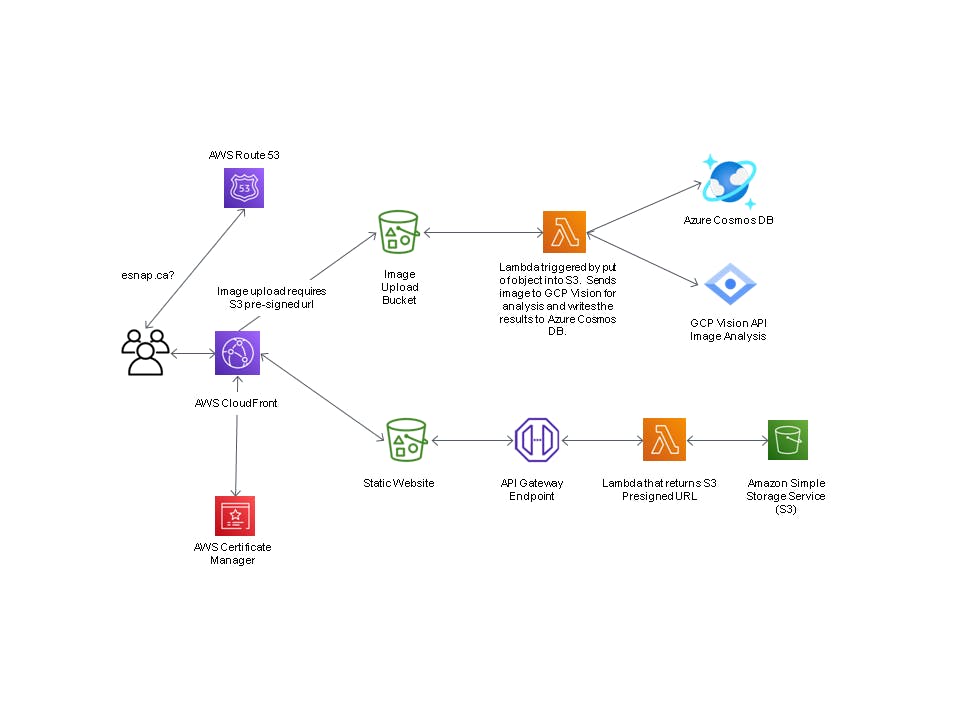

And here is the solution I implemented in response to this challenge.

Goal

The goal of this project was to design and build an image upload and recognition process using no fewer than three different cloud providers. As you will read, the project goal was achieved.

Outcome

In completing this project I was able to gain practical hands-on experience with cloud providers with whom I do not normally work.

Main Steps

This project was completed in two phases. In Phase I the entire solution was implemented on AWS. In Phase II the Phase I solution was broken into three pieces and implemented on three different cloud providers. Here are the four main steps of Phase II:

- Create a simple web page that takes a picture via a mobile device or computer webcam.

- Save that picture to a storage service on Cloud Provider 1.

- Upon saving that image, trigger a serverless process that calls out to an Image Recognition service on Cloud Provider 2.

- Take the metadata received back from the Image Recognition service and store it, along with a URL path to the original image, in a NoSQL database on Cloud Provider 3.
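Steps 3 and 4 boil down to a small event handler. The sketch below is a minimal, hypothetical version, not the author's code: it assumes Cloud Provider 1 is S3 (so the trigger payload is an S3 event notification), and the `analyze` and `store` callables, the `build_item` helper and the `image_url`/`labels` field names are illustrative stand-ins for the Image Recognition call and the NoSQL write.

```python
import uuid
from urllib.parse import quote, unquote_plus


def records_from_s3_event(event):
    """Yield (bucket, key) pairs from an S3 event notification.

    S3 URL-encodes object keys in event payloads (spaces arrive as '+'),
    so each key is decoded with unquote_plus.
    """
    for record in event.get("Records", []):
        s3 = record["s3"]
        yield s3["bucket"]["name"], unquote_plus(s3["object"]["key"])


def build_item(bucket, key, region, labels):
    """Hypothetical NoSQL document: recognition metadata plus a URL to the image."""
    return {
        "id": str(uuid.uuid4()),  # Cosmos DB items require a string "id"
        "image_fname": key,
        "image_url": f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}",
        "labels": [{"description": d, "score": s} for d, s in labels],
    }


def handle_upload(event, region, analyze, store):
    """Step 3: analyze each uploaded image. Step 4: store metadata plus URL."""
    items = []
    for bucket, key in records_from_s3_event(event):
        labels = analyze(bucket, key)  # hypothetical: returns [(description, score), ...]
        item = build_item(bucket, key, region, labels)
        store(item)  # hypothetical: writes the document to the NoSQL database
        items.append(item)
    return items
```

Passing `analyze` and `store` in as functions keeps the S3 event parsing testable without credentials for any provider. In a real Lambda, `analyze` might wrap the google-cloud-vision `ImageAnnotatorClient.label_detection` call and `store` might wrap the azure-cosmos `ContainerProxy.upsert_item` call; anything the function `print`s ends up in CloudWatch Logs.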

Solution

AWS, Azure and GCP were the three cloud service providers (CSPs) used to complete this project: AWS is Cloud Provider 1, GCP is Cloud Provider 2 and Azure is Cloud Provider 3. AWS was used for the CloudFront CDN, Route 53 DNS, S3 static website hosting, S3 image storage and the Lambda functions. GCP was used for its Vision API image recognition service, and Azure was used for its Cosmos DB NoSQL database.

The following were implemented on AWS:

- Two S3 buckets:
  - serverless website hosting contents bucket
    - configured as Public
    - configured for Static website hosting
  - upload bucket to receive the picture uploads
    - CORS configuration
    - configured to trigger the second Lambda function
- Serverless website hosting bucket contents:
  - HTML, jQuery and CSS that receive the S3 pre-signed URL and upload the picture object to the S3 upload bucket

- Four Lambda functions, three of which are deployed using AWS Chalice:

- the first Lambda function generates the S3 Pre-Signed URLs to PUT the image object

- deployed using Chalice to automatically create API REST API trigger and IAM role

- IAM Policy json file for S3 Get Object, Put Object and Put Object ACL

- Environment variables for S3 upload bucket name and region name in the config.json file

- deployed using Chalice to automatically create API REST API trigger and IAM role

- the second Lambda function that is triggered by S3 whenever a picture is uploaded and then communicates with the GCP Vision to perform the Image Recognition and outputs the GCP Vision image analysis results to CloudWatch Logs and will be sent to Azure CosmosDB NoSQL tasks.

- the third Lambda function returns the id, image_fname (primary key) and _ts (timestamp) of all items from the container in the Azure Cosmos DB

- deployed using Chalice to automatically create an API REST API trigger and associated IAM role

- IAM Policy json file for S3 Get Object, Put Object and Put Object ACL

- Environment variables for S3 upload bucket name and region name in the config.json file

- The returned data (id, image_fname and _ts) is displayed using JavaScript in a HTML table

- deployed using Chalice to automatically create an API REST API trigger and associated IAM role

- the fourth Lambda function returns all data of one item from the Azure Cosmos DB based on the id and primary key

- deployed using Chalice to automatically create and API REST API trigger and associated IAM role

- IAM Policy json file for S3 Get Object, Put Object and Put Object ACL

- Environment variables for S3 upload bucket name and region name in the config.json file

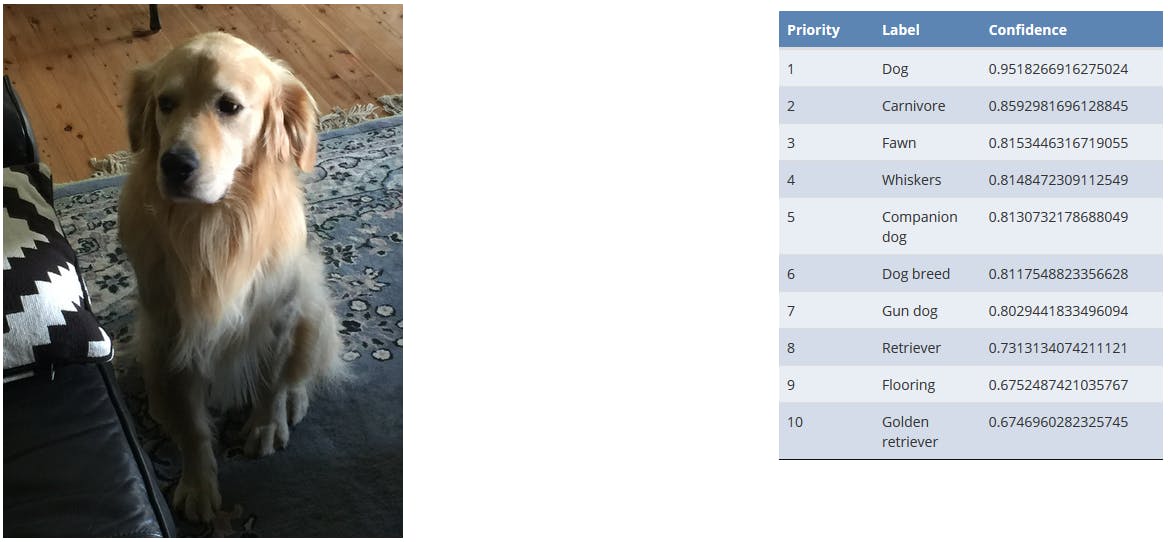

- The GCP Vision API score portion of returned data is displayed alongside the actual image using Javascript in a HTML table

- deployed using Chalice to automatically create and API REST API trigger and associated IAM role

- the first Lambda function generates the S3 Pre-Signed URLs to PUT the image object
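The first Lambda function's job can be sketched with boto3's `generate_presigned_url`. This is a hedged sketch, not the author's implementation: the `uploads/` key scheme, the 300-second expiry and the function names are hypothetical, and the S3 client is passed in (a boto3 S3 client in practice) so the logic can be exercised with a stub. Because the browser uploads straight to the upload bucket, that bucket also needs a CORS rule allowing the website's origin to `PUT`, so a matching `CORSConfiguration` shape for boto3's `put_bucket_cors` is included.

```python
import uuid


def make_presigned_put(s3_client, bucket, content_type="image/png", expires_in=300):
    """Return a pre-signed PUT URL plus the object key it will write to.

    s3_client is expected to be a boto3 S3 client; generate_presigned_url
    signs the URL locally and does not call S3.
    """
    extension = content_type.split("/")[-1]  # "image/png" -> "png"
    key = f"uploads/{uuid.uuid4()}.{extension}"  # hypothetical key scheme
    url = s3_client.generate_presigned_url(
        ClientMethod="put_object",
        Params={"Bucket": bucket, "Key": key, "ContentType": content_type},
        ExpiresIn=expires_in,
        HttpMethod="PUT",
    )
    return {"url": url, "key": key}


def upload_bucket_cors(website_origin):
    """CORS rules letting the website's origin PUT directly to the upload bucket.

    The shape matches boto3's put_bucket_cors(Bucket=..., CORSConfiguration=...).
    """
    return {
        "CORSRules": [
            {
                "AllowedOrigins": [website_origin],
                "AllowedMethods": ["PUT"],
                "AllowedHeaders": ["*"],
                "MaxAgeSeconds": 3000,
            }
        ]
    }
```

In a Chalice app this could back a route such as `@app.route('/presign', cors=True)`, with the bucket name read from the environment variables set in config.json; Chalice serializes a returned dict to JSON. Because `ContentType` is part of the signature, the browser's PUT must send the same `Content-Type` header or S3 rejects the request. The URL also carries only the signer's permissions, which is why the function's IAM policy needs `s3:PutObject`.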

The following tasks were implemented on GCP and Azure:

- Configured the GCP Vision Image Recognition Service

- Configured the Azure Cosmos DB NoSQL database
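The third and fourth Lambda functions read back from this Cosmos DB container. A minimal sketch under stated assumptions: the container is partitioned on `/image_fname` (the field the post calls the primary key), items carry hypothetical `image_url` and `labels` fields, and `container` is an azure-cosmos `ContainerProxy` in practice, obtained via `CosmosClient(...).get_database_client(...).get_container_client(...)`. The `query_items` and `read_item` calls use azure-cosmos keyword names; the helper function names are hypothetical.

```python
from datetime import datetime, timezone

LIST_QUERY = "SELECT c.id, c.image_fname, c._ts FROM c"


def list_images(container):
    """Third Lambda (sketch): id, image_fname and _ts of every item, newest first.

    _ts is the Unix epoch time (seconds) Cosmos DB stamps on each write.
    """
    rows = container.query_items(query=LIST_QUERY, enable_cross_partition_query=True)
    result = [
        {
            "id": row["id"],
            "image_fname": row["image_fname"],
            "_ts": row["_ts"],
            "updated": datetime.fromtimestamp(row["_ts"], tz=timezone.utc).isoformat(),
        }
        for row in rows
    ]
    return sorted(result, key=lambda row: row["_ts"], reverse=True)


def image_scores(container, item_id, image_fname):
    """Fourth Lambda (sketch): one item by id + partition key, labels by score."""
    item = container.read_item(item=item_id, partition_key=image_fname)
    labels = sorted(item.get("labels", []), key=lambda label: label["score"], reverse=True)
    return {"image_url": item.get("image_url"), "labels": labels}
```

Fetching one item with `read_item` (id plus partition key) is a point read, which is cheaper than running a query; listing all items needs a cross-partition query because it spans every `image_fname` value.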

It was necessary to register a DNS domain name with Route 53 and use the CloudFront CDN in order to use the navigator.mediaDevices Web API, which provides access to connected media input devices such as cameras and microphones, as well as screen sharing. That API is only available to pages served over HTTPS, and an S3 static website is served over HTTP only.

| Cloud Services Used | Reasons |
| --- | --- |
| AWS API Gateway | REST API triggers for the Lambda functions, including the one that returns the S3 pre-signed URL |
| AWS Certificate Manager (ACM) | SSL/TLS certificate for HTTPS, required by the navigator.mediaDevices Web API |
| AWS CloudFront | HTTPS access to the S3 static website, required by the navigator.mediaDevices Web API |
| AWS Lambda | Generate S3 pre-signed URLs and interact with GCP and Azure for image analysis and storage |
| AWS Route 53 | Domain used with CloudFront to serve the HTTP-only S3 static website over HTTPS |
| AWS S3 | Buckets for hosting static web content and receiving image uploads |
| Azure | Cosmos DB NoSQL database |
| Chalice | Deploy a Lambda function with API Gateway trigger, IAM permissions and environment variables |
| GCP | Vision API image recognition |
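The Chalice row covers three deployment concerns: the API Gateway trigger, IAM permissions and environment variables. The last two live in files under `.chalice/`, and the sketch below generates them. `autogen_policy`, `iam_policy_file`, `environment_variables` and `api_gateway_stage` are Chalice configuration keys; the app name, bucket, region and the `UPLOAD_BUCKET`/`REGION_NAME` variable names are hypothetical. With `autogen_policy` set to false, Chalice uses the supplied policy file instead of generating one, so the policy also has to grant CloudWatch Logs permissions.

```python
import json
from pathlib import Path


def chalice_stage_files(app_name, bucket, region, stage="dev"):
    """Return {relative_path: json_text} for .chalice/config.json and its policy file."""
    policy_file = f"policy-{stage}.json"
    config = {
        "version": "2.0",
        "app_name": app_name,
        "stages": {
            stage: {
                "api_gateway_stage": "api",
                "autogen_policy": False,  # use the hand-written policy below
                "iam_policy_file": policy_file,
                # Hypothetical names; the Lambda reads them via os.environ.
                "environment_variables": {"UPLOAD_BUCKET": bucket, "REGION_NAME": region},
            }
        },
    }
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:PutObjectAcl"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {
                # Without this, the function cannot write to CloudWatch Logs.
                "Effect": "Allow",
                "Action": ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": "arn:*:logs:*:*:*",
            },
        ],
    }
    return {
        ".chalice/config.json": json.dumps(config, indent=2),
        f".chalice/{policy_file}": json.dumps(policy, indent=2),
    }


def write_files(project_dir, files):
    """Write the generated files into a Chalice project directory."""
    for rel_path, text in files.items():
        path = Path(project_dir) / rel_path
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(text)
```

After `write_files(".", chalice_stage_files("presign", "my-upload-bucket", "us-east-1"))`, running `chalice deploy --stage dev` would deploy with that policy, and the function code could read `os.environ["UPLOAD_BUCKET"]`.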

Example Output

New Knowledge Acquired

In completing this project I learned several new cloud technologies. The first was Chalice, which impressed me greatly and which I think is best described by this quote from Alex Pulver on the AWS Developer Blog: “AWS Chalice is a Python Serverless Microframework for AWS and allows you to quickly create and deploy applications that use Amazon API Gateway and AWS Lambda.”

I also learned the GCP Vision API and Azure Cosmos DB. And although I was already familiar with AWS API Gateway, AWS CloudFront, AWS Route 53 and AWS Certificate Manager, I gained much more confidence in using them.

Solution Artifacts

Gotchas and Lessons Learned

When I started this project, I held three AWS certifications (Solutions Architect, Developer and SysOps) but had not worked with any other CSPs, so I needed to learn GCP Vision and Azure Cosmos DB. In addition, generating and using S3 pre-signed URLs to upload the image files proved challenging. Using JavaScript required me to learn AJAX, callbacks and Promises, and I still have much more to learn in this regard.

One gotcha arose after I decided to use the navigator.mediaDevices Web API. One of the design requirements was to produce an entirely serverless solution, so I had decided to host my website on S3. A static website hosted on S3 can only be served over HTTP, but the navigator.mediaDevices API is only available to pages served over HTTPS. This required adding Route 53, CloudFront and AWS Certificate Manager (ACM) to the mix so that the S3 website could be accessed over HTTPS.
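The rule behind this gotcha is the browser's secure-context requirement. The sketch below is a simplified Python model of the W3C Secure Contexts "potentially trustworthy origin" check, written only to show which URLs qualify; it is not browser code, it omits cases such as `file:` URLs, and the function name is hypothetical.

```python
import ipaddress
from urllib.parse import urlsplit


def is_potentially_trustworthy(url):
    """Simplified model of the secure-context check browsers apply before
    exposing camera access through navigator.mediaDevices.

    Covers only the common cases: https/wss schemes and loopback hosts.
    """
    parts = urlsplit(url)
    if parts.scheme in ("https", "wss"):
        return True
    host = parts.hostname or ""
    if host == "localhost" or host.endswith(".localhost"):
        return True
    try:
        # 127.0.0.0/8 and ::1 count as trustworthy even over plain HTTP.
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # any other host name served over http
```

Under this model, a hypothetical S3 website endpoint such as `http://my-bucket.s3-website-us-east-1.amazonaws.com/` fails the check while a CloudFront HTTPS URL passes, which may also explain why a camera page can work when tested from `http://localhost` yet fail once hosted on S3.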

Test Drive

You can find my creation at Brian's Multi-Cloud Image Analysis Project

The website may not work: because cloud services are billed on a pay-as-you-go basis, I would incur costs by leaving the solution up indefinitely. To avoid those costs, I will shut down some of the cloud resources associated with this project sometime in February 2021.

Conclusions

This is the third A Cloud Guru challenge I have completed, and even though I have three AWS certifications, I have learned much more by completing these challenges. It has been a very valuable experience, and I encourage anyone who wants to improve their cloud architecture skills to complete these challenges.

Thanks Scott for this challenge.